Eval-Framework Documentation

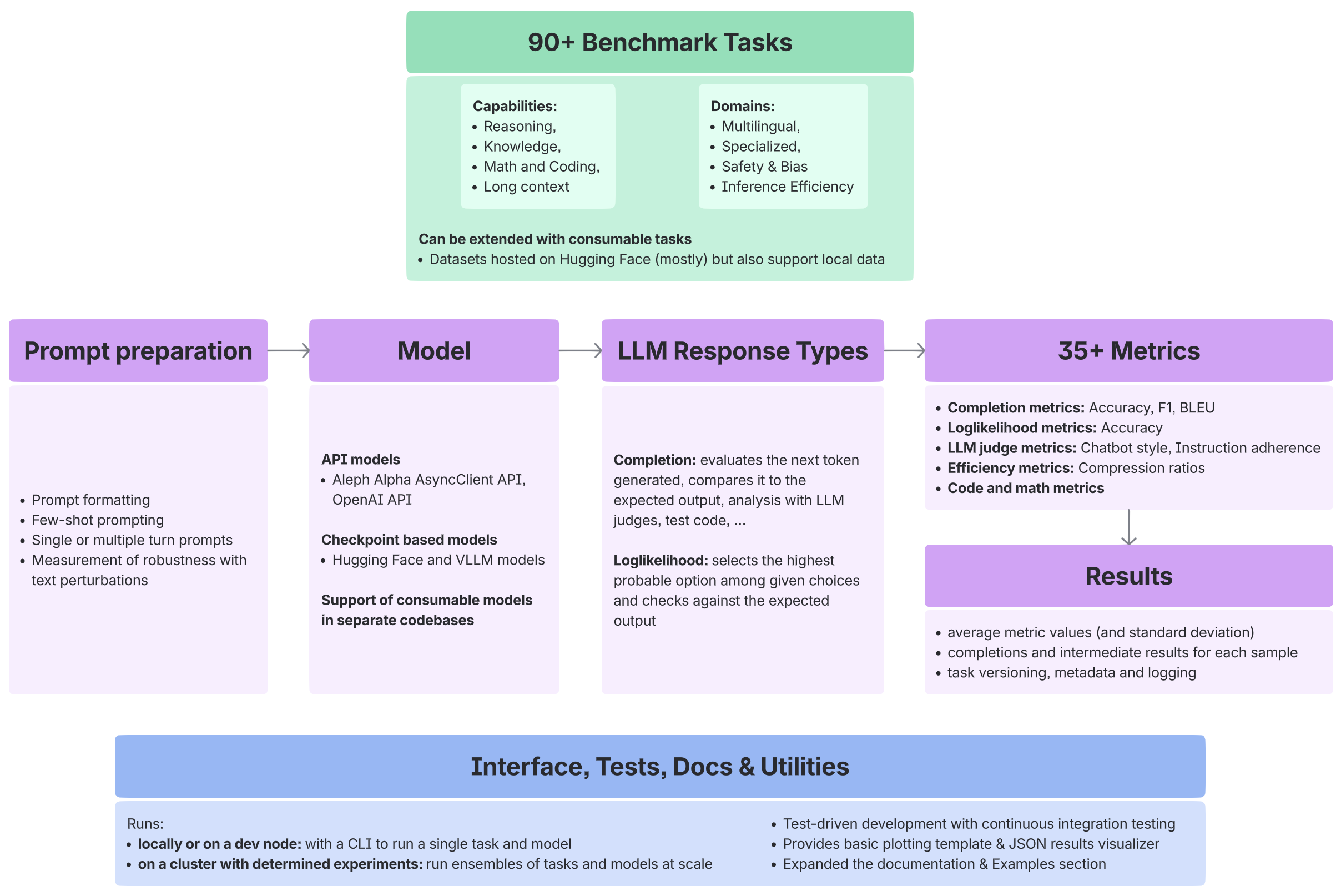

A production-ready framework for evaluating large language models across 90+ benchmarks, covering reasoning, coding, safety, and long-context tasks. The framework provides flexible model integration, custom benchmarks and metrics, perturbation testing, rich outputs, and statistical analysis. It supports local and distributed evaluations, including Determined AI integration.

Key Features

Scalability: Distributed evaluation with Determined AI support.

Extensibility: Easily add custom models, benchmarks, and metrics.

Comprehensive Benchmarks: 90+ tasks covering reasoning, coding, math, knowledge, long-context, and safety.

Flexible Model Integration: HuggingFace, custom APIs, and BaseLLM-based models.

Robust Metrics: Completion metrics, loglikelihood metrics, LLM-as-a-judge evaluations, and efficiency metrics.

Perturbation Testing & Analysis: Configurable perturbation types, confidence intervals, and significance testing.

Docker Support: Pre-configured for local or distributed setups.
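To make the "confidence intervals" item above concrete, here is a minimal sketch of a percentile bootstrap interval over per-sample scores. It is a generic illustration and not the framework's own implementation: the function name `bootstrap_ci`, its parameters, and the sample scores are all hypothetical.

```python
import random
from statistics import mean


def bootstrap_ci(scores, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of per-sample scores.

    Hypothetical helper for illustration; not part of eval-framework.
    """
    if not scores:
        raise ValueError("scores must be non-empty")
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(scores)
    # Resample with replacement and record the mean of each resample.
    means = sorted(mean(rng.choices(scores, k=n)) for _ in range(n_resamples))
    # Read the interval bounds off the sorted resample means.
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return mean(scores), lower, upper


# Made-up per-sample correctness values (1 = correct, 0 = incorrect).
scores = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]
point, lower, upper = bootstrap_ci(scores)
print(f"accuracy={point:.2f}, 95% CI=[{lower:.2f}, {upper:.2f}]")
```

With only ten samples the interval is wide, which is one reason to report it alongside the point estimate when comparing models on small subsets.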

Quick Start

The codebase is compatible with Python 3.12 and PyTorch 2.5. GPU support requires appropriate CUDA dependencies.

Install the library via uv (recommended):

    # Clone the repository
    git clone https://github.com/Aleph-Alpha-Research/eval-framework
    cd eval-framework

    # Install all dependencies
    uv sync --all-extras

Now, you can run a single benchmark locally:

    uv run eval_framework \
        --models src/eval_framework/llm/models.py \
        --llm-name Smollm135MInstruct \
        --task-name "MMLU" \
        --task-subjects "abstract_algebra" \
        --output-dir ./eval_results \
        --num-fewshot 5 \
        --num-samples 10
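If you want to repeat this run for several subjects, the command can be assembled programmatically. The sketch below only builds and prints one command per subject, using exactly the flags shown above. The helper name `build_eval_command` is hypothetical, and the second subject name (`anatomy`) is an assumption about what the task accepts.

```python
import shlex


def build_eval_command(
    subject,
    task_name="MMLU",
    llm_name="Smollm135MInstruct",
    models_path="src/eval_framework/llm/models.py",
    output_dir="./eval_results",
    num_fewshot=5,
    num_samples=10,
):
    """Return the quick-start CLI invocation as an argument list.

    Hypothetical helper; it only mirrors the flags from the command above.
    """
    return [
        "uv", "run", "eval_framework",
        "--models", models_path,
        "--llm-name", llm_name,
        "--task-name", task_name,
        "--task-subjects", subject,
        "--output-dir", output_dir,
        "--num-fewshot", str(num_fewshot),
        "--num-samples", str(num_samples),
    ]


# "anatomy" is an assumed subject name; adjust to the subjects the task exposes.
for subject in ["abstract_algebra", "anatomy"]:
    cmd = build_eval_command(subject)
    print(shlex.join(cmd))  # shell-safe rendering of the argument list
```

To actually launch the runs, each argument list could be passed to `subprocess.run(cmd, check=True)` from the repository root. Keeping the command as a list avoids shell quoting problems.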

Documentation Overview

Getting Started

User Guides

- Creating Completion Tasks

- How to Add a New Benchmark to Eval Framework

- Included Benchmark Tasks

- Controlling HuggingFace Upload Results Guide

- Docker Guide

- How to Evaluate HuggingFace Models with Eval Framework

- Creating Loglikelihood Tasks

- Model Arguments

- Overview Dataloading

- Understanding Evaluation Results Guide

- Using Determined

- Utils in eval-framework

- Weights and Biases Integration with Eval-Framework

Contributing Guidelines

API Reference

Citation & License

If you use eval-framework in your research, please cite it as:

@software{eval_framework,
  title={Aleph Alpha Eval Framework},
  year={2025},
  url={https://github.com/Aleph-Alpha-Research/eval-framework}
}

Licensed under the [Apache License 2.0](LICENSE).

Funding

This project has received funding from the European Union’s Digital Europe Programme under grant agreement No. 101195233 (OpenEuroLLM). The contents of this publication are the sole responsibility of the OpenEuroLLM consortium.